
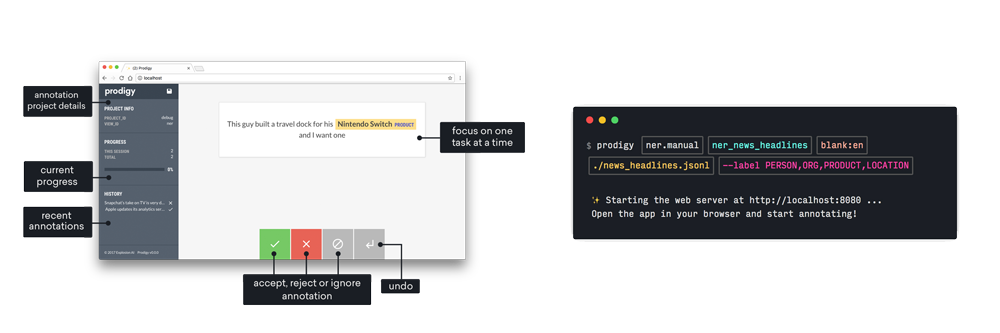

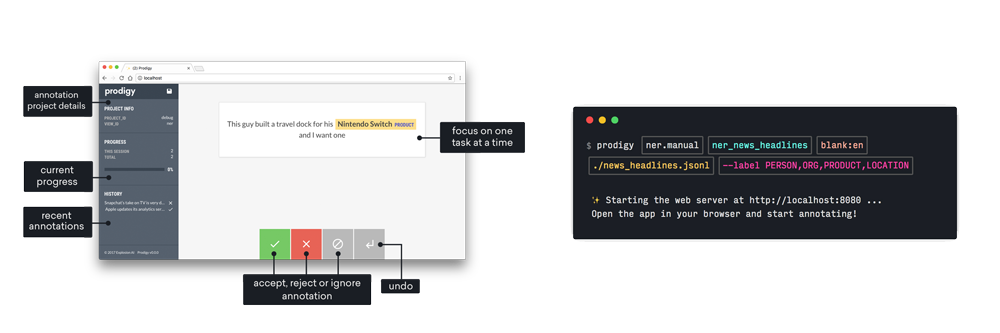

- Downloadable developer tool and library

- Create, review and train from your annotations

- Runs entirely on your own machines

- Powerful built-in workflows

Prodigy’s novel prompt tournament workflow gives you a reliable and quantifiable way to evaluate your prompts on real-world data and determine which prompts perform best for your specific use case. Collaborate on prompt engineering and see the results in real time.
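To make "quantifiable" concrete, here is a minimal sketch of one generic way to score a prompt tournament. Annotators compare the outputs of two prompts and pick a winner, and each decision updates an Elo rating per prompt. This is an illustration of the general technique under stated assumptions, not Prodigy's own implementation. The function names, the starting rating of 1000 and the step size `K = 32` are hypothetical choices.

```python
# Generic Elo-style scoring of pairwise prompt comparisons.
# Hypothetical sketch: not Prodigy's internal tournament algorithm.

K = 32.0  # hypothetical update step size


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that prompt A is preferred over prompt B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def update(ratings: dict, winner: str, loser: str, k: float = K) -> None:
    """Apply one annotator decision (winner preferred over loser) in place."""
    gain = k * (1.0 - expected_score(ratings[winner], ratings[loser]))
    ratings[winner] += gain
    ratings[loser] -= gain  # zero-sum: total rating is conserved


def run_tournament(prompts, judgments, start: float = 1000.0):
    """Return (prompt, rating) pairs sorted from best to worst."""
    ratings = {p: start for p in prompts}
    for winner, loser in judgments:
        update(ratings, winner, loser)
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    prompts = ["prompt_a", "prompt_b", "prompt_c"]
    # Each tuple is one hypothetical annotator choice: (preferred, rejected)
    judgments = [("prompt_a", "prompt_b"), ("prompt_a", "prompt_c"), ("prompt_c", "prompt_b")]
    for name, rating in run_tournament(prompts, judgments):
        print(f"{name}: {rating:.1f}")
```

Because each update is zero-sum and weighted by how surprising the outcome was, an upset against a highly rated prompt moves the ratings more than an expected win does.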

Prodigy lets you build entirely custom workflows and interfaces using any model in the loop. Prodigy can transform the model’s response into consistent, structured data. Develop your prompts in Prodigy’s intuitive web interface and query the model live as you make changes to see instant results.
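As a rough illustration of turning a model's free-text response into consistent, structured data, the sketch below assumes a prompt that asks the model to reply with a JSON object containing a `label` and a `reason`. It validates the label against a fixed set and rejects anything malformed. The response format, the `LABELS` set and the `parse_response` function are all hypothetical and are not part of Prodigy's API.

```python
import json
from typing import Optional

# Hypothetical label scheme the prompt asks the model to choose from
LABELS = {"POSITIVE", "NEGATIVE", "NEUTRAL"}


def parse_response(text: str) -> Optional[dict]:
    """Turn a raw model reply into a structured record, or None if it is unusable."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None  # the model did not return valid JSON
    if not isinstance(data, dict):
        return None  # valid JSON, but not the expected object shape
    # Normalise casing and whitespace so "positive " and "POSITIVE" agree
    label = str(data.get("label", "")).strip().upper()
    if label not in LABELS:
        return None  # label outside the allowed scheme
    return {"label": label, "reason": str(data.get("reason", "")).strip()}


if __name__ == "__main__":
    print(parse_response('{"label": "positive", "reason": "Upbeat tone"}'))
    print(parse_response("I think it's positive"))
```

Returning `None` rather than guessing keeps the output consistent: malformed replies can be flagged for review instead of silently becoming bad annotations.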